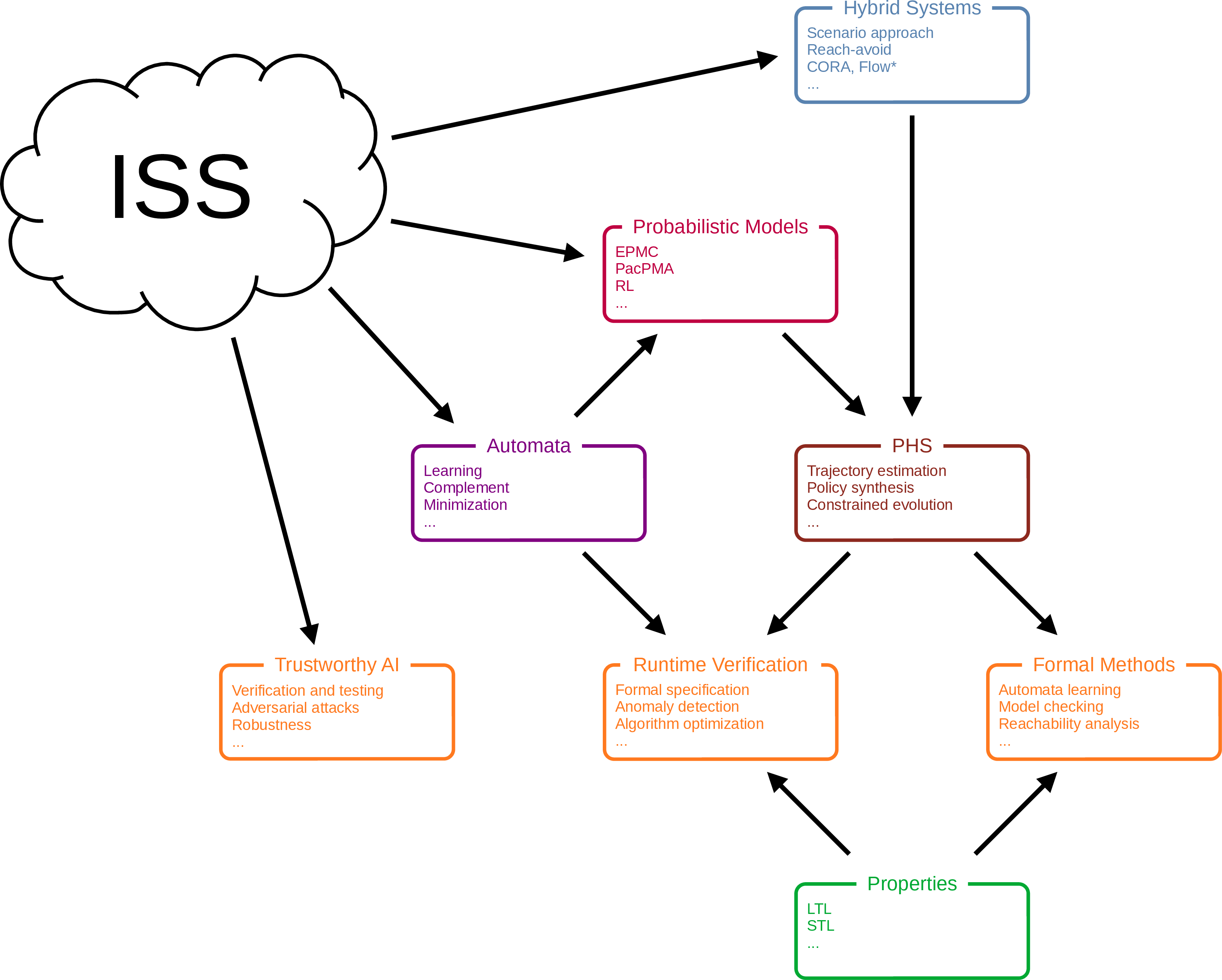

The ISS verification approach

To ensure the safety and robustness of autonomous driving systems on the ISS platform, our roadmap involves leveraging trustworthy AI techniques, runtime verification, and formal methods. Our plan is to obtain models from the ISS framework using automata learning techniques to derive automata models that capture system behaviors, or by modeling the systems as hybrid systems. For hybrid system models, we will utilize state-of-the-art tools such as CORA, Flow*, and PyBDR to perform reach-avoid analysis. For the automaton models, we intend to inject probabilities, such as failure rates, to create probabilistic systems like probabilistic automata, using our PacPMA tool. We will then apply our probabilistic model checker, EPMC, to verify the model against formal specifications, typically expressed in Linear Temporal Logic (LTL) or other temporal logics. Additionally, we plan to implement runtime verification to ensure continuous safety during operation.